Effective AI Requires Constant Monitoring, AI Leaders Say

Refining and reviewing data models is essential for impactful application of artificial intelligence.

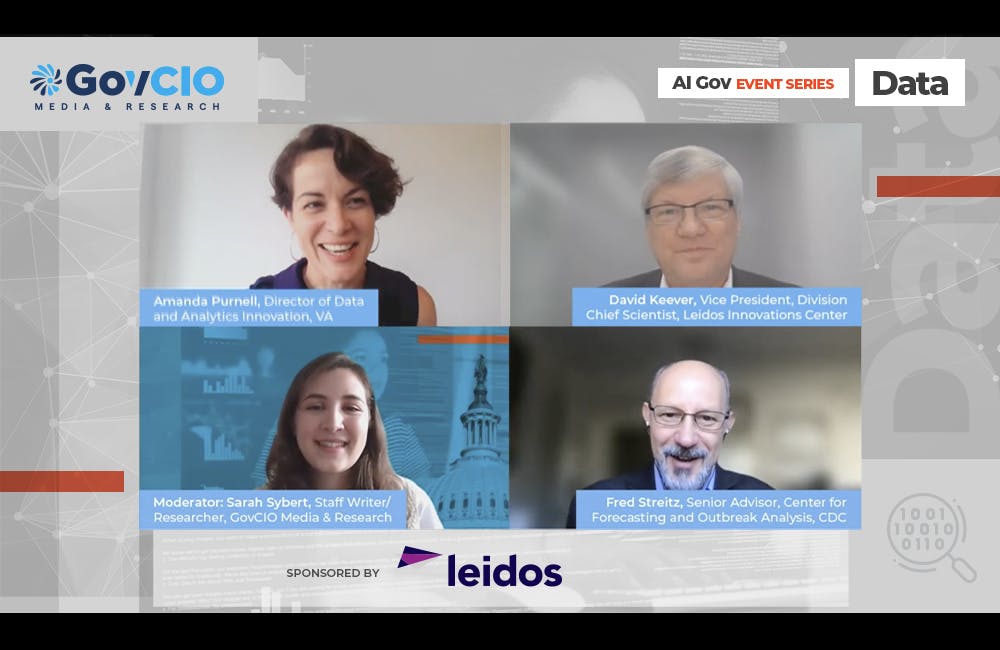

Federal data leaders expounded on their testing strategies for artificial intelligence (AI) models to ensure accuracy and relevancy at GovCIO Media & Research’s AI Gov: Data event Thursday.

AI models are generally only as effective as the data used to build them. In cases where data is not continually refreshed, or its limitations go unaddressed, conclusions may be inaccurate. Sometimes, even if the data is accurate and current, AI models can fail to account for discrepancies or variations in the data and produce flawed results.

The Department of Veterans Affairs has made recent investments in AI and uses advanced modeling for public health and biomedical research. Data leaders overseeing these initiatives have become increasingly attuned to the need to review their models for biases and to note the potential limitations of a particular data set, so that results are interpreted and applied correctly.

“You really do need to test models – systematically, strategically, over and over again, with different sub populations and even with different VA hospitals,” said Amanda Purnell, VA Director of Data and Analytics Innovation, at the event. “So there is no one model for the VA. That wouldn’t work. Because people are different by region, different by area, different by health condition. So we have to continuously test and retest models. And we have the computer power to do this incredible work with reconfiguring, readjusting, developing specialized and tailored models for different groups of people.”
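The kind of subgroup testing Purnell describes can be sketched in a few lines. The example below is an illustrative assumption, not the VA's method: the function names, the 0.8 accuracy threshold and the toy records are all hypothetical. It scores one model's predictions separately for each group (for example, each hospital) and flags any group where accuracy falls short.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, actual, predicted) records."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if actual == predicted:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def groups_needing_review(records, min_accuracy=0.8):
    """List groups whose accuracy is below min_accuracy (a hypothetical cutoff)."""
    scores = accuracy_by_group(records)
    return sorted(g for g, acc in scores.items() if acc < min_accuracy)


# Hypothetical toy data: (group, actual outcome, model prediction).
records = [
    ("site_a", 1, 1), ("site_a", 0, 0), ("site_a", 1, 1), ("site_a", 0, 0),
    ("site_b", 1, 0), ("site_b", 0, 0), ("site_b", 1, 0), ("site_b", 0, 1),
]

overall = sum(actual == predicted for _, actual, predicted in records) / len(records)
per_group = accuracy_by_group(records)
flagged = groups_needing_review(records)
```

In this toy data the overall accuracy hides a large gap between the two sites, which is the reason for scoring each group on its own rather than relying on a single aggregate number.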

Other health and science-focused agencies have worked to apply similar review standards to their AI projects, including the Centers for Disease Control and Prevention’s (CDC) efforts to model the spread of COVID-19. That modeling requires large-scale recalibration when new variants emerge to ensure projections account for the virulence and pathology of new strains.

“There is no single model that is applied. We apply a model which is usually trained on a specific set of data to address a specific question,” said Fred Streitz, Senior Advisor at the CDC Center for Forecasting and Outbreak Analysis, at Thursday’s event. “And those models are recalculated constantly, and the turnaround on that is hours or days. So as new variants emerge, we respond to that very quickly and retrain models as necessary to address specific questions. But there is no one model to rule them all. There is no one forecast that’s absolutely correct. Everything is in the context in which it was built and the information that went into the model.”

The unifying process behind all these initiatives is ongoing review of data inputs, which helps ensure the integrity of AI models’ conclusions.

“This is an iterative process and models get built and used by individuals, but there’s this continuous monitoring of the models that also needs to occur,” said David Keever, Vice President and Division Chief Scientist at the Leidos Innovations Center, at Thursday’s event.
