New EEOC, DOJ Guidance Targets Hiring Discrimination in AI Tech

The effort aims to mitigate discrimination and bias in algorithms, AI and software used in hiring processes.

Using emerging technology like AI in the hiring process is often met with concerns about bias, especially as agencies work toward improving equitable services.

The Equal Employment Opportunity Commission and Justice Department on Thursday released new guidance that seeks to mitigate disability discrimination when employers use the technology for hiring and employment decision-making.

The documents note that algorithms, AI and other software used in hiring processes and screening often discriminate against job applicants with disabilities. These include technologies such as computer-based tests that gauge applicants’ skills and abilities, software that evaluates applicants’ resumes and AI that assesses online video interviews.

EEOC and DOJ will enforce the guidance according to the Americans with Disabilities Act — a federal law that prohibits employers and companies from discriminating on the basis of disability. It will require all employers from the public and private sectors to comply.

“At the Justice Department, we are committed to using the ADA to address the barriers that stand in the way of people with disabilities who are seeking to enter the job market,” DOJ Civil Rights Division Assistant Attorney General Kristen Clarke said in a media event. “As our technology continues to rapidly advance, so too must our enforcement efforts to ensure that people with disabilities are not marginalized and left behind in a digital world.”

The guidance follows EEOC Chair Charlotte Burrows’ AI and Algorithmic Fairness Initiative launched last year to ensure that emerging technologies applied in hiring decision-making comply with federal civil rights laws.

“According to the U.S. Bureau of Labor Statistics, individuals with disabilities are facing unemployment rates almost twice as high as other workers who do not have disabilities,” Burrows said. “We know that over 80% of employers are beginning to use AI in some form in their broader work and their employment decision-making, so we thought it was really important to make sure that we helped explain how to ensure that doing that can really comport with civil rights laws instead of becoming a high-tech pathway to discrimination.”

Burrows noted that employers looking to prevent disability discrimination throughout their hiring processes should keep in mind three different red flags across the vendor products they use. The first of these is a lack of reasonable accommodation for prospective applicants with disabilities.

“If the vendor hasn’t thought about [reasonable accommodation], isn’t ready to engage in that — that’s got to be a warning signal,” Burrows said. “One of the things that can happen is in the assessment or whatever evaluation — if everything is automated, it is very difficult for a person with a disability to raise their hand and say, ‘Hey, I need an accommodation.’”

“Screen outs” are the second way in which disability discrimination can occur, Burrows added. This is when the algorithms and AI that employers use screen out applicants based on the assessments they conduct. These algorithms can, at times, screen out applicants based on their disabilities rather than on their ability to meet the job requirements, which Burrows noted is a major issue across these technologies.

Third, Burrows said that AI used in hiring processes can also violate the ADA’s restrictions on disability-related inquiries and medical examinations.

“If, for instance, one of the questions that’s being used that employees ask in the automated process is: did you ever apply for workers comp? Well, often typically that’s a disability, but it’s going to reveal that the person had some sort of disability, some sort of injury that led to that,” Burrows said. “It’s a prohibited question, so the employer needs to make sure that that kind of thing is not being folded into the tech.”

To mitigate disability discrimination when using hiring software, DOJ’s guidance recommends that employers use technologies that evaluate job skills, rather than ones that measure disabilities or an applicant’s sensory, manual or speaking skills.

Job applicants who feel that their rights have been violated can file a charge of discrimination with EEOC or a complaint with the DOJ Civil Rights Division.

This is a carousel with manually rotating slides. Use Next and Previous buttons to navigate or jump to a slide with the slide dots

-

IRS Tax Filing Pilot Part of Digital-First Customer Experience Plan

Many taxpayers increasingly expect flexible, easy and self-directed digital interactions, agency leaders said.

5m read -

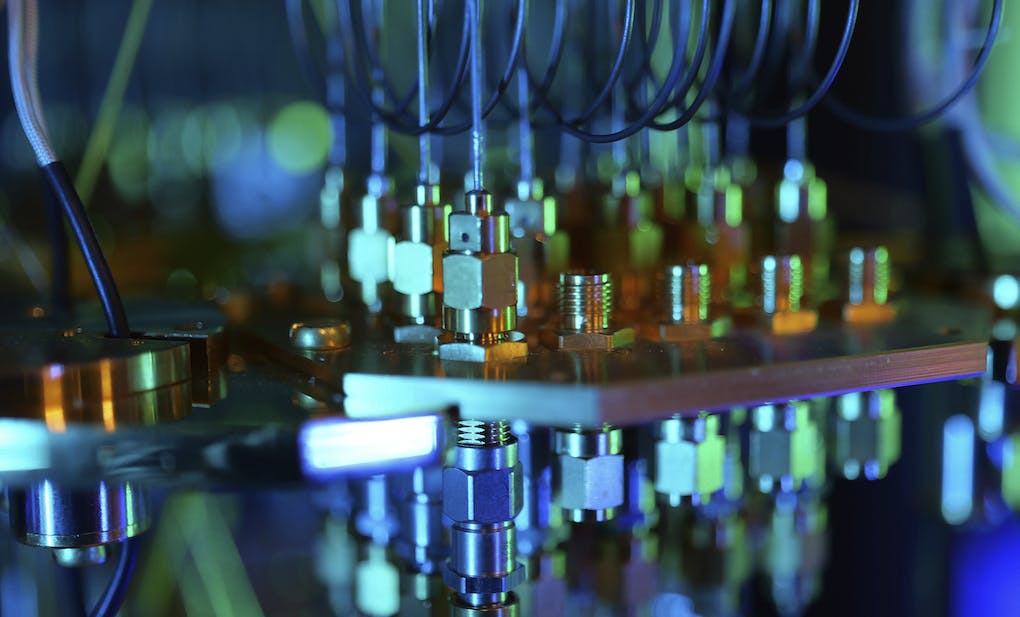

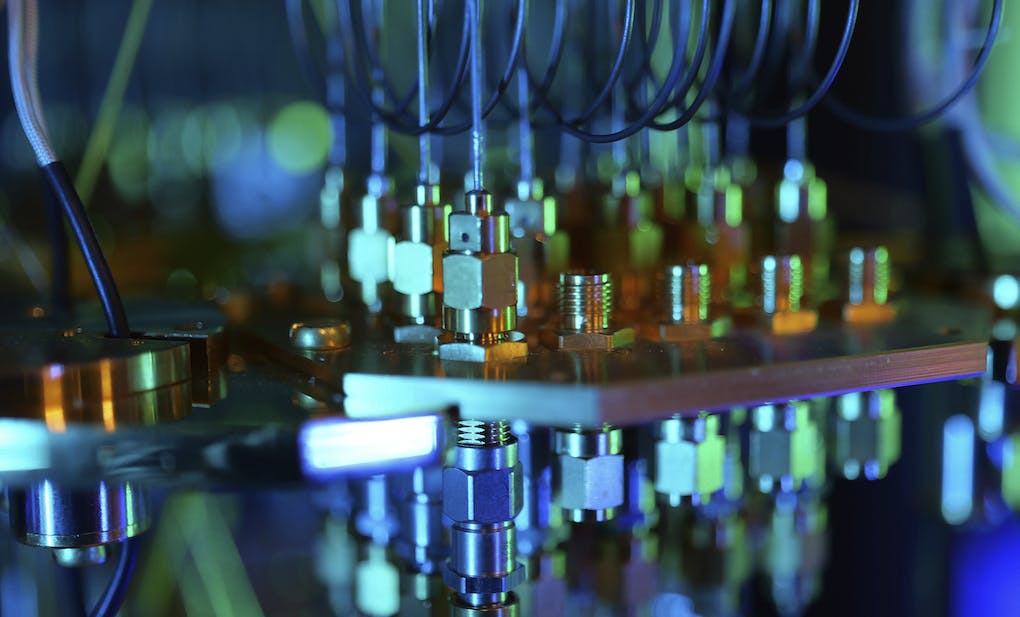

Federal Agencies Make the Case for Quantum

Amid development of emerging technologies like AI and machine learning, leaders see promise in quantum computing.

6m read -

Cyber Incident Reporting Regulation Takes Shape

An upcoming CISA rule aims to harmonize cyber incident reporting requirements for critical infrastructure entities.

5m read -

Connectivity Drives Future of Defense

The Defense Department is strategizing new operating concepts ahead of future joint force operations.

8m read